发现问题

上周从每天的日志统计日报发现,突然部分存储服务器开始大量出现类似以下的日志:

Dec 10 15:13:59 myserver kernel: [17475246.696258] sdal: sdal1 sdal9

Dec 10 15:13:59 myserver kernel: sdal: sdal1 sdal9

Dec 10 15:14:00 myserver kernel: [17475246.882323] sdam: sdam1 sdam9

Dec 10 15:14:00 myserver kernel: sdam: sdam1 sdam9

Dec 10 15:14:00 myserver kernel: [17475246.948613] sdan: sdan1 sdan9

Dec 10 15:14:00 myserver kernel: sdan: sdan1 sdan9

Dec 10 15:14:00 myserver kernel: [17475247.044814] sdao: sdao1 sdao9

Dec 10 15:14:00 myserver kernel: sdao: sdao1 sdao9

Dec 10 15:14:00 myserver kernel: [17475247.157436] sdap: sdap1 sdap9

Dec 10 15:14:00 myserver kernel: sdap: sdap1 sdap9

Dec 10 15:14:00 myserver kernel: [17475247.223108] sdbe: sdbe1 sdbe9

Dec 10 15:14:00 myserver kernel: sdbe: sdbe1 sdbe9

Dec 10 15:14:00 myserver zed: eid=14661 class=vdev.bad_ashift pool_guid=0x84383CC58AC07C5C vdev_path=/dev/disk/by-id/scsi-35000cca273704d68-part1

Dec 10 15:14:00 myserver zed: eid=14662 class=vdev.bad_ashift pool_guid=0x84383CC58AC07C5C vdev_path=/dev/disk/by-id/scsi-35000cca2736fab64-part1

Dec 10 15:14:00 myserver zed: eid=14663 class=vdev.bad_ashift pool_guid=0x84383CC58AC07C5C vdev_path=/dev/disk/by-id/scsi-35000cca2737014b0-part1

Dec 10 15:14:00 myserver kernel: [17475247.316825] sdbf: sdbf1 sdbf9

Dec 10 15:14:00 myserver kernel: sdbf: sdbf1 sdbf9

Dec 10 15:14:00 myserver zed: eid=14664 class=vdev.bad_ashift pool_guid=0x84383CC58AC07C5C vdev_path=/dev/sdab1

Dec 10 15:14:00 myserver zed: eid=14665 class=vdev.bad_ashift pool_guid=0x84383CC58AC07C5C vdev_path=/dev/disk/by-id/scsi-35000cca2736efbe0-part1

Dec 10 15:14:00 myserver zed: eid=14666 class=vdev.bad_ashift pool_guid=0x84383CC58AC07C5C vdev_path=/dev/disk/by-id/scsi-35000cca273704c34-part1

Dec 10 15:14:00 myserver zed: eid=14667 class=vdev.bad_ashift pool_guid=0x84383CC58AC07C5C vdev_path=/dev/disk/by-id/scsi-35000cca2737051c8-part1

Dec 10 15:14:00 myserver zed: eid=14668 class=vdev.bad_ashift pool_guid=0x84383CC58AC07C5C vdev_path=/dev/disk/by-id/scsi-35000cca273704fac-part1

Dec 10 15:14:00 myserver zed: eid=14669 class=vdev.bad_ashift pool_guid=0x84383CC58AC07C5C vdev_path=/dev/disk/by-id/scsi-35000cca27370a2bc-part1

zpool events日志如下:

# zpool events -v

TIME CLASS

Dec 13 2019 09:57:53.616088963 ereport.fs.zfs.vdev.bad_ashift

class = "ereport.fs.zfs.vdev.bad_ashift"

ena = 0xfeed7bfb2ca01401

detector = (embedded nvlist)

version = 0x0

scheme = "zfs"

pool = 0x176af123486292aa

vdev = 0x7a3902d1006a3b0f

(end detector)

pool = "ost_98"

pool_guid = 0x176af123486292aa

pool_state = 0x0

pool_context = 0x0

pool_failmode = "wait"

vdev_guid = 0x7a3902d1006a3b0f

vdev_type = "disk"

vdev_path = "/dev/disk/by-id/scsi-35000cca27366b0e8-part1"

vdev_devid = "scsi-35000cca27366b0e8-part1"

vdev_enc_sysfs_path = "/sys/class/enclosure/0:0:0:0/7"

vdev_ashift = 0xc

vdev_complete_ts = 0x56feed6a4da4d

vdev_delta_ts = 0xce0f5

vdev_read_errors = 0x0

vdev_write_errors = 0x0

vdev_cksum_errors = 0x0

parent_guid = 0x945a8813ce9c944d

parent_type = "raidz"

vdev_spare_paths =

vdev_spare_guids =

prev_state = 0x0

time = 0x5df2f021 0x24b8c583

eid = 0x183aa1

# zpool events

TIME CLASS

Dec 13 2019 09:57:53.616088963 ereport.fs.zfs.vdev.bad_ashift

Dec 13 2019 09:57:53.616088963 ereport.fs.zfs.vdev.bad_ashift

Dec 13 2019 09:57:53.616088963 ereport.fs.zfs.vdev.bad_ashift

Dec 13 2019 09:57:53.616088963 ereport.fs.zfs.vdev.bad_ashift

Dec 13 2019 09:57:53.616088963 ereport.fs.zfs.vdev.bad_ashift

Dec 13 2019 09:57:53.616088963 ereport.fs.zfs.vdev.bad_ashift

单单从日志来看,其实并不容易发现是什么问题。但对比不同的服务器日志后,很快就能发现大量日志的出现是从Dec 10 15:00:00时间左右开始发生的,因此判断当时应该做了一些变更。从审计日志中查看当时执行的记录,发现是修改了raid状态检查的脚本。执行脚本马上就会出现类似的日志,证明脚本的执行是起因。进一步分析脚本,确定是mvlci这个Marvell公司的raid卡状态检测工具造成的。

zfs-zed

vdev.bad_ashift错误是在vdev_open()的时候产生的,可能会造成性能问题,但对数据安全性应该不会有太大影响,参考:What happens in ZFS when you have 4K sector disks in an ashift=9 vdev。代码注释中也说只是抛出event而不直接error让pool不可用,可见并不算严重错误。

/* zfs-0.7.9 - include/sys/fm/fs/zfs.h */

#define FM_EREPORT_ZFS_DEVICE_BAD_ASHIFT "vdev.bad_ashift"

/* zfs-0.7.9 - module/zfs/vdev.c */

/*

* Prepare a virtual device for access.

*/

int

vdev_open(vdev_t *vd)

{

/* ... skipped ... */

/*

* Detect if the alignment requirement has increased.

* We don't want to make the pool unavailable, just

* post an event instead.

*/

if (ashift > vd->vdev_top->vdev_ashift &&

vd->vdev_ops->vdev_op_leaf) {

zfs_ereport_post(FM_EREPORT_ZFS_DEVICE_BAD_ASHIFT,

spa, vd, NULL, 0, 0);

}

/* ... skipped ... */

}

我们可以直接把zfs-zed服务关掉,这样再也不用收到vdev.bad_ashift错误了。但zed有错吗?为什么要zed躺枪?而且就算没有vdev.bad_ashift日志,仍然会有大量的磁盘分区日志。

以其关掉zfs-zed服务,还不如把Marvell raid状态检测的时间间隔拉长一些,5分钟一次os盘的检测有点太频繁了。

跟怎么减少日志相比,我更感兴趣的是,这些日志是怎么来的。接下来继续分析。

不正常的uevent

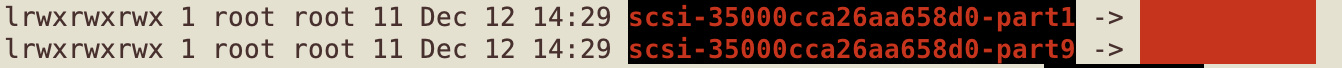

刚才说过,vdev.bad_ashift错误是在vdev_open()的时候产生的,但vdev_open()不应该经常调用的。通过观察,偶然发现磁盘链接消失。zpool使用的是scsi-xxxxxx这样的设备路径,当设备有变化的时候,vdev_open()被调用也就不奇怪了。

为了证实系统确实有进行设备路径link的删除操作,用udevadm monitor去观察,很快就实锤。

monitor will print the received events for:

UDEV - the event which udev sends out after rule processing

KERNEL - the kernel uevent

KERNEL[1719771.836799] remove /devices/pci0000:b2/0000:b2:00.0/0000:b3:00.0/host0/port-0:0/expander-0:0/port-0:0:1/expander-0:1/port-0:1:0/end_device-0:1:0/target0:0:1/0:0:1:0/block/sda/sda1 (block)

KERNEL[1719771.836814] remove /devices/pci0000:b2/0000:b2:00.0/0000:b3:00.0/host0/port-0:0/expander-0:0/port-0:0:1/expander-0:1/port-0:1:0/end_device-0:1:0/target0:0:1/0:0:1:0/block/sda/sda9 (block)

KERNEL[1719771.878747] change /devices/pci0000:b2/0000:b2:00.0/0000:b3:00.0/host0/port-0:0/expander-0:0/port-0:0:1/expander-0:1/port-0:1:0/end_device-0:1:0/target0:0:1/0:0:1:0/block/sda (block)

KERNEL[1719771.878826] add /devices/pci0000:b2/0000:b2:00.0/0000:b3:00.0/host0/port-0:0/expander-0:0/port-0:0:1/expander-0:1/port-0:1:0/end_device-0:1:0/target0:0:1/0:0:1:0/block/sda/sda1 (block)

KERNEL[1719771.878877] add /devices/pci0000:b2/0000:b2:00.0/0000:b3:00.0/host0/port-0:0/expander-0:0/port-0:0:1/expander-0:1/port-0:1:0/end_device-0:1:0/target0:0:1/0:0:1:0/block/sda/sda9 (block)

设备的分区信息被remove之后,又很快重新add回来,这是什么骚操作?

uevent追踪

我们知道,问题的源头是来自于mvcli,用strace命令去查看mvcli的操作过程,发现它除了做一些很无聊的事情外,并没有什么remove/add/change设备或类似的操作。

从udevadm monitor的信息可以看出,remove/add操作是来自于内核,所以可以看看内核是怎么调用uevent发送的。搜索一下kernel的uevent发送机制,发现是通过kobject_uevent来发uevent的,参考stackoverflow的回答。用systemtap来尝试捕获信息,下面是systemtap脚本:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 | #!/usr/bin/stap

global count = 0

probe kernel.function("kobject_uevent").call {

printf("---------------------\n");

print_backtrace();

printf("---------------------\n");

count ++;

if (count > 10) {

exit();

}

}

|

得到下面的结果:

---------------------

0xffffffff84979c10 : kobject_uevent+0x0/0x10 [kernel]

0xffffffff84aa3813 : device_del+0x193/0x210 [kernel]

0xffffffff8495bde8 : delete_partition+0x48/0x80 [kernel]

0xffffffff8495be66 : drop_partitions.isra.10.part.11+0x46/0x80 [kernel]

0xffffffff8495c3a6 : rescan_partitions+0x76/0x2c0 [kernel]

0xffffffff84956bc9 : __blkdev_reread_part+0x69/0x70 [kernel]

0xffffffff84956bf3 : blkdev_reread_part+0x23/0x40 [kernel]

0xffffffff849577ed : blkdev_ioctl+0x4ed/0xa20 [kernel]

0xffffffff84880771 : block_ioctl+0x41/0x50 [kernel]

0xffffffff84856210 : do_vfs_ioctl+0x3a0/0x5a0 [kernel]

0xffffffff848564b1 : sys_ioctl+0xa1/0xc0 [kernel]

0xffffffff84d74ddb : system_call_fastpath+0x22/0x27 [kernel]

0x7f1c051fb8d7

---------------------

可以看到kobject_uevent是在ioctl这个系统调用之后产生的。回过头来再看mvcli的strace日志,没有什么特别的ioctl调用。调查一度陷入困境。

uevent在用户态一般是由udevd来监听处理的,在RHEL7,这个服务是systemd-udevd,属于systemd的一部分。尝试去strace systemd-udevd进程,果然发现有趣的调用。

1220 16:57:33.236947 open("/dev/sda", O_RDONLY|O_NONBLOCK|O_NOFOLLOW|O_CLOEXEC) = 12 <0.000021>

1220 16:57:33.236994 flock(12, LOCK_EX|LOCK_NB) = 0 <0.000012>

1220 16:57:33.237026 ioctl(12, BLKRRPART) = 0 <0.032882>

1220 16:57:33.269962 close(12) = 0 <0.000038>

参考man sd(4),BLKRRPART是强制重读scsi硬盘的硬盘分区操作。

BLKRRPART

Forces a reread of the SCSI disk partition tables. No parame‐

ter is needed.

那么,为什么systemd-udevd要做这个操作呢?下载systemd的代码进行分析。还好一搜BLKRRPART就出来了,是在synthesize_change这个函数里执行的:

/* systemd-219 - src/udev/udevd.c */

static int synthesize_change(struct udev_device *dev) {

/* ... skipped ... */

/*

* Try to re-read the partition table. This only succeeds if

* none of the devices is busy. The kernel returns 0 if no

* partition table is found, and we will not get an event for

* the disk.

*/

fd = open(udev_device_get_devnode(dev), O_RDONLY|O_CLOEXEC|O_NOFOLLOW|O_NONBLOCK);

if (fd >= 0) {

r = flock(fd, LOCK_EX|LOCK_NB);

if (r >= 0)

r = ioctl(fd, BLKRRPART, 0);

close(fd);

if (r >= 0)

part_table_read = true;

}

/* ... skipped ... */

}

而synthesize_change是在什么情况下被调用的?可以看下面的代码,当event的watch mask是IN_CLOSE_WRITE的时候,就会执行。

/* systemd-219 - src/udev/udevd.c */

static int handle_inotify(struct udev *udev) {

union inotify_event_buffer buffer;

struct inotify_event *e;

ssize_t l;

l = read(fd_inotify, &buffer, sizeof(buffer));

if (l < 0) {

if (errno == EAGAIN || errno == EINTR)

return 0;

return log_error_errno(errno, "Failed to read inotify fd: %m");

}

FOREACH_INOTIFY_EVENT(e, buffer, l) {

struct udev_device *dev;

dev = udev_watch_lookup(udev, e->wd);

if (!dev)

continue;

log_debug("inotify event: %x for %s", e->mask, udev_device_get_devnode(dev));

if (e->mask & IN_CLOSE_WRITE)

synthesize_change(dev);

else if (e->mask & IN_IGNORED)

udev_watch_end(udev, dev);

udev_device_unref(dev);

}

参考man inotify(7),当文件被可写的方式打开,文件关闭的时候会产生IN_CLOSE_WRITE。

IN_CLOSE_WRITE (+)

File opened for writing was closed.

结合man中的例子学习一下:

Examples

Suppose an application is watching the directory dir and the file

dir/myfile for all events. The examples below show some events that

will be generated for these two objects.

fd = open("dir/myfile", O_RDWR);

Generates IN_OPEN events for both dir and dir/myfile.

read(fd, buf, count);

Generates IN_ACCESS events for both dir and dir/myfile.

write(fd, buf, count);

Generates IN_MODIFY events for both dir and dir/myfile.

fchmod(fd, mode);

Generates IN_ATTRIB events for both dir and dir/myfile.

close(fd);

Generates IN_CLOSE_WRITE events for both dir and

dir/myfile.

从以上信息,可以知道/dev/sda产生了IN_CLOSE_WRITE事件,导致systemd-udevd执行重读分区表,触发remove/add之类的操作。那到底是什么进程以可写的方式打开并关闭了设备?从mvcli命令的strace结果,可以找到下面的信息

110725 14:58:49.280185 open("/dev/sda", O_RDWR|O_NONBLOCK) = 5 <0.000015>

110725 14:58:49.280222 ioctl(5, SG_IO, {'S', SG_DXFER_FROM_DEV, cmd[6]=[12, 01, 80, 00, fc, 00], mx_sb_len=32, iovec_count=0, dxfer_len=252, timeout=60000, flags=0, data[12]=[00, 80, 00, 08, 32, 59, 48, 4d, 31, 38, 58, 44], status=00, masked_status=00, sb[0]=[], host_status=0, driver_status=0, resid=240, duration=0, info=0}) = 0 <0.000149>

110725 14:58:49.280425 close(5) = 0 <0.000021>

ioctl执行了scsi命令cmd[6]=[12, 01, 80, 00, fc, 00],这是scsi INQUIRY命令,查询page code 80h(Unit Serial Number),详细可以参考seagate的Interface manuals。命令只是取一下硬盘的信息,但以O_RDWR的方式打开设备,会触发systemd-udevd重读分区表操作,这是问题所在。

为了进一步确认确实是systemd-udevd执行了synthesize_change操作,可以打开systemd-udevd的debug日志。根据代码,会产生synthesising partition这样的日志:

/* systemd-219 - src/udev/udevd.c */

static int synthesize_change(struct udev_device *dev) {

/* ... skipped ... */

/*

* We have partitions but re-reading the partition table did not

* work, synthesize "change" for the disk and all partitions.

*/

log_debug("device %s closed, synthesising 'change'", udev_device_get_devnode(dev));

strscpyl(filename, sizeof(filename), udev_device_get_syspath(dev), "/uevent", NULL);

write_string_file(filename, "change");

udev_list_entry_foreach(item, udev_enumerate_get_list_entry(e)) {

_cleanup_udev_device_unref_ struct udev_device *d = NULL;

d = udev_device_new_from_syspath(udev, udev_list_entry_get_name(item));

if (!d)

continue;

if (!streq_ptr("partition", udev_device_get_devtype(d)))

continue;

log_debug("device %s closed, synthesising partition '%s' 'change'",

udev_device_get_devnode(dev), udev_device_get_devnode(d));

strscpyl(filename, sizeof(filename), udev_device_get_syspath(d), "/uevent", NULL);

write_string_file(filename, "change");

}

/* ... skipped ... */

}

然后在日志中,确实能找到代码中的信息:

Dec 16 13:32:42 myserver systemd-udevd[291102]: inotify event: 8 for /dev/sdac

Dec 16 13:32:42 myserver systemd-udevd[291102]: device /dev/sdac closed, synthesising 'change'

Dec 16 13:32:42 myserver systemd-udevd[291102]: device /dev/sdac closed, synthesising partition '/dev/sdac1' 'ch

Dec 16 13:32:42 myserver systemd-udevd[291102]: device /dev/sdac closed, synthesising partition '/dev/sdac9' 'ch

解决方案

如何解决这个问题?由于mvcli没有开源,不太可能通过源码去修改。但通过strace分析,可以看到mvcli先创建 /tmp/diskList文件,然后读这个文件,再去做open和scsi INQUIRY。猜测程序是按/tmp/diskList文件中的设备,挨个去执行scsi INQUIRY。

110746 14:58:49.231097 execve("/bin/sh", ["sh", "-c", "ls /dev/sd* 2>/dev/null | grep '[^0-9]$' > /tmp/diskList"], [/* 29 vars */]) = 0 <0.000171>

110725 14:58:49.280045 open("/tmp/diskList", O_RDONLY) = 4 <0.000012>

110725 14:58:49.280076 fstat(4, {st_mode=S_IFREG|0644, st_size=1664, ...}) = 0 <0.000009>

110725 14:58:49.280103 mmap(NULL, 4096, PROT_READ|PROT_WRITE, MAP_PRIVATE|MAP_ANONYMOUS, -1, 0) = 0x7fb84ebe0000 <0.000011>

110725 14:58:49.280132 read(4, "/dev/sda\n/dev/sdaa\n/dev/sdab\n/dev/sdac\n/dev/sdad\n/dev/sdae\n/dev/sdaf\n/dev/sdag\n/dev/sdah\n/dev/sdai\n/dev/sdaj\n/dev/sdak\n/dev/sdal\n/dev/sdam\n/dev/sdan\n/dev/sdao\n/dev/sdap\n/dev/sdaq\n/dev/sdar\n/dev/sdas\n/dev/sdat\n/dev/sdau\n/dev/sdav\n/dev/sdaw\n/dev/sdax\n/dev/sday\n/dev/sdaz\n/dev/sdb\n/dev/sdba\n/dev/sdbb\n/dev/sdbc\n/dev/sdbd\n/dev/sdbe\n/dev/sdbf\n/dev/sdbg\n/dev/sdbh\n/dev/sdbi\n/dev/sdbj\n/dev/sdbk\n/dev/sdbl\n/dev/sdbm\n/dev/sdbn\n/dev/sdbo\n/dev/sdbp\n/dev/sdbq\n/dev/sdbr\n/dev/sdbs\n/dev/sdbt\n/dev/sdbu\n/dev/sdbv\n/dev/sdbw\n/dev/sdbx\n/dev/sdby\n/dev/sdbz\n/dev/sdc\n/dev/sdca\n/dev/sdcb\n/dev/sdcc\n/dev/sdcd\n/dev/sdce\n/dev/sdcf\n/dev/sdcg\n/dev/sdch\n/dev/sdci\n/dev/sdcj\n/dev/sdck\n/dev/sdcl\n/dev/sdcm\n/dev/sdcn\n/dev/sdco\n/dev/sdcp\n/dev/sdcq\n/dev/sdcr\n/dev/sdcs\n/dev/sdct\n/dev/sdcu\n/dev/sdcv\n/dev/sdcw\n/dev/sdcx\n/dev/sdcy\n/dev/sdcz\n/dev/sdd\n/dev/sdda\n/dev/sddb\n/dev/sddc\n/dev/sddd\n/dev/sdde\n/dev/sddf\n/dev/sddg\n/dev/sddh\n/dev/sddi\n/dev/sddj\n/dev/sddk\n/dev/sddl\n/dev/sddm\n/dev/sddn\n/dev/sddo\n/dev/sddp\n/dev/sddq\n/dev/sddr\n/dev/sdds\n/dev/sddt\n/dev/sddu\n/dev/sddv\n/dev/sddw\n/dev/sddx\n/dev/sddy\n/dev/sddz\n/dev/sde\n/dev/sdea\n/dev/sdeb\n/dev/sdec\n/dev/sded\n/dev/sdee\n/dev/sdef\n/dev/sdeg\n/dev/sdeh\n/dev/sdei\n/dev/sdej\n/dev/sdek\n/dev/sdel\n/dev/sdem\n/dev/sden\n/dev/sdeo\n/dev/sdep\n/dev/sdeq\n/dev/sder\n/dev/sdes\n/dev/sdet\n/dev/sdeu\n/dev/sdev\n/dev/sdew\n/dev/sdex\n/dev/sdey\n/dev/sdez\n/dev/sdf\n/dev/sdfa\n/dev/sdfb\n/dev/sdfc\n/dev/sdfd\n/dev/sdfe\n/dev/sdff\n/dev/sdfg\n/dev/sdfh\n/dev/sdfi\n/dev/sdfj\n/dev/sdfk\n/dev/sdfl\n/dev/sdfm\n/dev/sdg\n/dev/sdh\n/dev/sdi\n/dev/sdj\n/dev/sdk\n/dev/sdl\n/dev/sdm\n/dev/sdn\n/dev/sdo\n/dev/sdp\n/dev/sdq\n/dev/sdr\n/dev/sds\n/dev/sdt\n/dev/sdu\n/dev/sdv\n/dev/sdw\n/dev/sdx\n/dev/sdy\n/dev/sdz\n", 4096) = 1664 <0.000015>

由于Marvell raid只管理系统盘,在我们的系统中是/dev/sdfm,删除其他设备后,把/tmp/diskList文件用chattr命令加上不可更改属性,再去测试mvcli可以正常返回结果,无重读分区表的日志。打开zfs-zed服务,也没有再报vdev.bad_ashift错误。

没有源代码的情况下,禁止/tmp/diskList文件的修改是否会影响其他命令选项的使用是不确定的,所以为了减少影响,可以在监控脚本对mvcli进行封装,在执行前修改/tmp/diskList文件内容并加不可更改属性,执行后再改回来即可。

其他信息

mvcli会去扫描/sys/class/scsi_generic下面的sg设备,找到Marvell设备后,通过ioctl去收发scsi命令。

384462 23:57:03.837807 open("/sys/class/scsi_generic/sg179/device/vendor", O_RDONLY) = 4 <0.000021>

384462 23:57:03.837846 fstat(4, {st_mode=S_IFREG|0444, st_size=4096, ...}) = 0 <0.000009>

384462 23:57:03.837878 mmap(NULL, 4096, PROT_READ|PROT_WRITE, MAP_PRIVATE|MAP_ANONYMOUS, -1, 0) = 0x7f94a2903000 <0.000009>

384462 23:57:03.837904 read(4, "Marvell \n", 4096) = 9 <0.000011>

384462 23:57:03.837933 close(4) = 0 <0.000009>

384462 23:57:03.837958 munmap(0x7f94a2903000, 4096) = 0 <0.000010>

384462 23:57:03.837985 open("/sys/class/scsi_generic/sg179/device/model", O_RDONLY) = 4 <0.000017>

384462 23:57:03.838022 fstat(4, {st_mode=S_IFREG|0444, st_size=4096, ...}) = 0 <0.000009>

384462 23:57:03.838049 mmap(NULL, 4096, PROT_READ|PROT_WRITE, MAP_PRIVATE|MAP_ANONYMOUS, -1, 0) = 0x7f94a2903000 <0.000009>

384462 23:57:03.838075 read(4, "Console \n", 4096) = 17 <0.000010>

384462 23:57:03.838104 close(4) = 0 <0.000009>

384462 23:57:03.838129 munmap(0x7f94a2903000, 4096) = 0 <0.000010>

384462 23:57:03.838156 getdents(3, /* 0 entries */, 32768) = 0 <0.000010>

384462 23:57:03.838183 close(3) = 0 <0.000010>

384462 23:57:03.838209 stat("/dev/sg179", {st_mode=S_IFCHR|0600, st_rdev=makedev(21, 179), ...}) = 0 <0.000012>

384462 23:57:03.838242 open("/dev/sg179", O_RDWR) = 3 <0.000017>

384462 23:57:03.838284 ioctl(3, SG_IO, {'S', SG_DXFER_FROM_DEV, cmd[6]=[12, 00, 00, 00, ff, 00], mx_sb_len=32, iovec_count=0, dxfer_len=255, timeout=20000, flags=0, data[255]=[03, 80, 05, 32, 27, 00, 00, 00, 4d, 61, 72, 76, 65, 6c, 6c, 20, 43, 6f, 6e, 73, 6f, 6c, 65, 20, 20, 20, 20, 20, 20, 20, 20, 20, 31, 2e, 30, 31, 00, 80, 00, 00, 4d, 52, 56, 4c, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 02, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 03, 00, 00, 00, 00, 01, 01, 00, 34, 62, 95, 00, 00, 00, 00, 00, 00, 60, d3, 36, 97, 8b, ff, ff, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 00, 30, 00, 00, 00, 00, 00, 00, 00, 10, 00, 00, 00, 00, 00, 00], status=00, masked_status=00, sb[0]=[], host_status=0, driver_status=0, resid=0, duration=0, info=0}) = 0 <0.000068>

mvcli命令主要的时间消耗在是pvdisplay,在strace记录中共花了18.8秒,搞不懂为什么会有这个需求。估计把lvm软件删除后命令就可以很快完成。

385141 23:57:03.932932 execve("/usr/sbin/pvdisplay", ["pvdisplay", "-c"], [/* 27 vars */]) = 0 <0.000169>

384999 23:57:22.731182 <... wait4 resumed> [{WIFEXITED(s) && WEXITSTATUS(s) == 0}], 0, NULL) = 385141 <18.798689>

- zfs on linux是通过ZED+FM(Fault Management)来实现disk-add、disk-change等事件的自动处理的,具体见Fault Management Logic for ZED。